Contextual Relevance vs Noise

The hidden battle inside every RAG system

If you have been around large language models for more than five minutes, you have probably noticed something weird.

They can sound smart, confident, and personal, but the moment you ask a question that depends on history, the model starts to drift. It forgets what you said earlier. It contradicts itself. It gives answers that feel close, but not quite right.

That is not because the model is lazy. It is because most LLMs are basically stateless. They do not carry your full conversation around like a human does. They respond based on what is inside the prompt right now.

That is where RAG comes in.

RAG stands for Retrieval-Augmented Generation. The simple idea is: do not rely on the model’s memory alone. Give it a memory system. When a user asks something, the system retrieves relevant context from an external store, then includes that context in the prompt so the model can respond with grounding and continuity. The idea has been formalized in the research literature for a while now, starting with the early RAG work that pairs a language model with a retriever that pulls relevant passages from a knowledge base.

In practice, most modern RAG systems work like this:

- Take a question or message
- Convert it into an embedding
- Search a vector database for similar meaning
- Pull back the top results
- Feed those results into the model as context
- Ask the model to answer using that context

Vector search is what makes this possible. Instead of searching by exact keywords, you search by meaning. That is why vector databases and vector search have become the default building block for RAG.
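
To make those steps concrete, here is a minimal sketch of the loop in Python. Everything in it is an assumption for illustration: `embed` is a toy bag-of-words counter standing in for a real embedding model (so it matches on shared words, not true meaning), the `store` list stands in for a vector database, and `build_prompt` is a hypothetical prompt template.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, store: list[str], top_k: int = 2) -> list[str]:
    """Rank every stored snippet against the query and keep the top_k."""
    q = embed(query)
    ranked = sorted(store, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:top_k]

def build_prompt(query: str, context: list[str]) -> str:
    """Hypothetical template: retrieved context first, then the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context.\n\nContext:\n{joined}\n\nQuestion: {query}"

# Stand-in for a vector database: a plain list of remembered facts.
store = [
    "The user prefers vegetarian recipes.",
    "The user lives in Lisbon.",
    "The user asked about train schedules last week.",
]

question = "Which recipes does the user like?"
prompt = build_prompt(question, retrieve(question, store, top_k=1))
print(prompt)
```

In a real system you would swap `embed` for an actual embedding model and the list scan for a vector index. The shape of the loop stays the same.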

Now here is the part that gets interesting.

RAG does not magically solve memory. It just moves the problem upstream into retrieval. And inside retrieval there is a constant tradeoff that shows up in every system I have worked on:

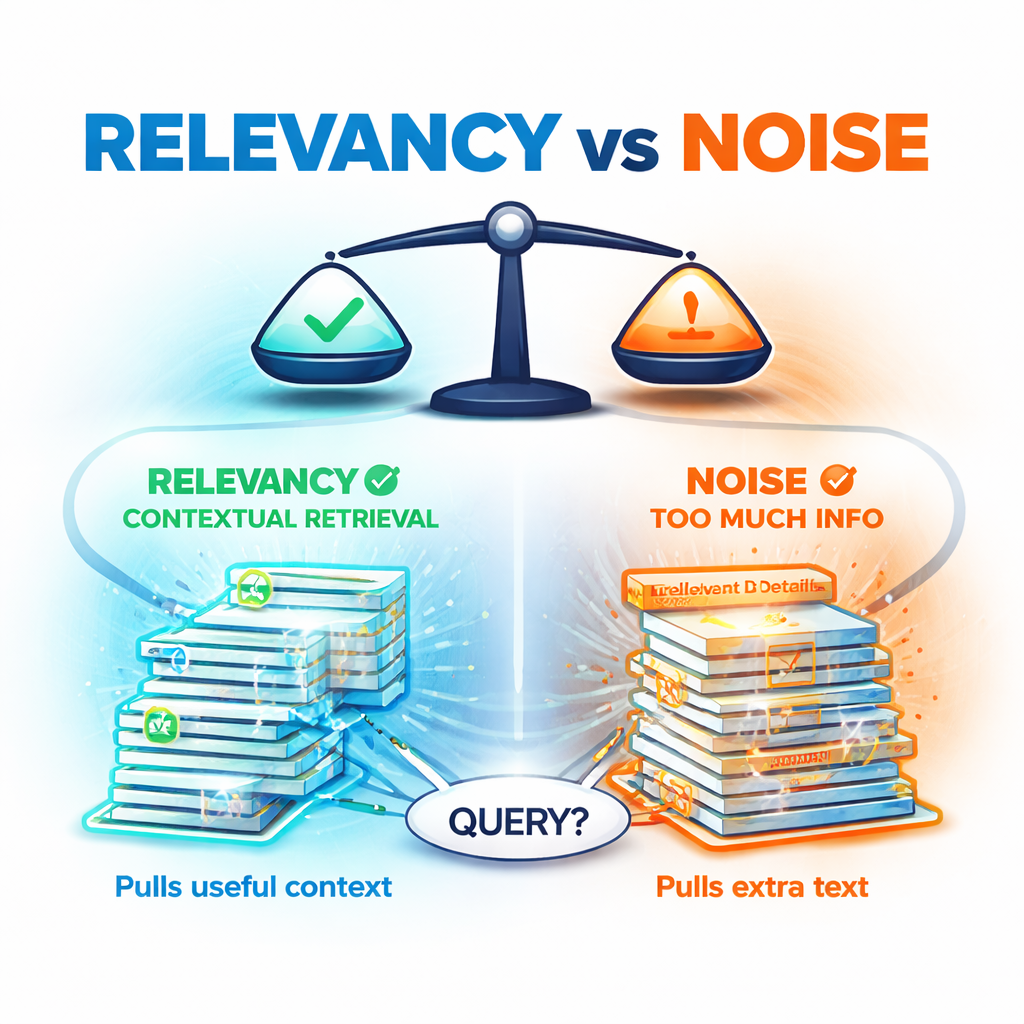

contextual relevance vs noise

What contextual relevance means

Contextual relevance is when the retrieved context helps the model do the job better. It gives the model the right facts, the right preferences, the right constraints, or the right history. It reduces guessing. It reduces hallucinations. It makes the output feel like it “remembers.”

A good RAG answer feels like talking to someone who was actually listening.

What noise looks like

Noise is everything else.

Noise is retrieved text that is technically related but not useful. It is background details, unrelated topics, outdated information, repeated content, or irrelevant tangents.

Noise is dangerous because the model treats anything you provide as signal. If you feed it a large blob of context, it will often try to incorporate it, even when it should ignore it. The result is answers that are less precise and sometimes simply wrong.

This is not just theory. Research on long-context behavior, often described as the “lost in the middle” effect, shows that models do not reliably use long context well. Performance can drop when the relevant information is buried in the middle of a long input, even when the context window is large.

So the goal is not “more context.”

The goal is “better context.”
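
One way to act on “better, not more” is to filter what retrieval hands back before it reaches the prompt. The sketch below assumes each retrieved chunk already comes with a similarity score. The `min_score` floor and `max_chunks` cap are hypothetical knobs with made-up defaults, and the right values depend on your embedding model and data.

```python
def select_context(scored, min_score=0.5, max_chunks=3):
    """Keep chunks that clear a relevance floor, best first, capped at max_chunks.

    `scored` is a list of (similarity, text) pairs from the retriever.
    """
    kept = [(score, text) for score, text in scored if score >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in kept[:max_chunks]]

# Illustrative scores only; real scores come from your vector search.
scored = [
    (0.82, "User is allergic to peanuts."),
    (0.31, "User once mentioned a trip to Peru."),
    (0.77, "User prefers quick weeknight dinners."),
    (0.12, "User asked about printer drivers."),
]

print(select_context(scored))
```

The low-scoring chunks are still related to the user, which is exactly what makes them noise: related, but not useful for this question.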

Chunking is where relevance vs noise gets decided

In most RAG systems, you store information as chunks. A chunk is just a snippet you save and retrieve later.

Choosing chunk size sounds like a boring implementation detail. It is not. Chunking is one of the biggest levers you have for relevance vs noise.

Here is the tradeoff:

Small chunks

- Pros: more precise retrieval, less unrelated text, less noise
- Cons: you may lose meaning that depends on surrounding sentences, you retrieve fragments, contextual relevance can drop

Large chunks

- Pros: more complete thought units, more surrounding meaning, higher chance the answer is inside the retrieved chunk
- Cons: you pull extra baggage, noise goes up, and the model may latch onto the wrong part of the chunk

This is why chunking guidance often frames the goal as “big enough to be meaningful, small enough to stay precise.”
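
A quick sketch shows both failure modes on the same text. It assumes the simplest possible chunker, a fixed number of whitespace-separated words per chunk. `chunk_words` and the sample message are made up for illustration, and real systems often split on sentences or tokens instead.

```python
def chunk_words(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

text = (
    "I moved to Berlin in March. My budget for rent is 1200 euros. "
    "Also, I am thinking about adopting a dog next year."
)

small = chunk_words(text, 6)   # precise, but can cut a thought in half
large = chunk_words(text, 24)  # complete, but mixes three unrelated topics

for chunk in small:
    print("small:", chunk)
for chunk in large:
    print("large:", chunk)
```

With six-word chunks, the rent budget gets separated from its currency, so a question about rent retrieves a fragment. With one large chunk, the same question drags in the move and the dog.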

If you are building RAG for conversation history, this gets even harder. Conversations are messy. People change topics. Preferences evolve. Old context can conflict with new context. Large chunks can easily mix multiple topics and introduce contradictions.

A simple mental model

When I explain this to non-technical folks, I use this model:

Contextual relevance is like handing the model a sticky note with the one thing it needed to remember.

Noise is like handing the model your entire notebook and saying, “it is in there somewhere.”

Both contain the truth. Only one helps you act.

Practical ways teams reduce noise

There is no single perfect chunk size. It depends on your domain, your user behavior, and what “memory” means for your product. But there are patterns that consistently help:

- Use overlap. Small chunks with slight overlap preserve meaning without turning into giant blobs.
- Retrieve wide, then rerank. Pull a wider candidate set, then use a second step to rank by contextual relevance and keep only the best few.
- Store summaries alongside raw text. Summaries often retrieve better than verbatim chat logs.
- Time-weight your memory. Recent context often matters more than old context.
- Measure it. Create a small test set of real questions and score answers for usefulness, correctness, and continuity.
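
Two of these patterns, overlap and time-weighted reranking, are small enough to sketch. This is one possible shape, not a reference implementation. `chunk_with_overlap`, `Candidate`, and `rerank` are hypothetical names, the exponential half-life decay is just one common way to favor recent memories, and the 30-day default is an arbitrary assumption.

```python
from dataclasses import dataclass

def chunk_with_overlap(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Fixed-size word chunks where each chunk repeats the last `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be at least 0 and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, stop, step)]

@dataclass
class Candidate:
    text: str
    similarity: float  # score from the first, wide retrieval pass
    age_days: float    # how long ago this memory was stored

def rerank(candidates: list[Candidate], half_life_days: float = 30.0, keep: int = 2) -> list[Candidate]:
    """Second pass: decay similarity by age, then keep only the best few."""
    def score(c: Candidate) -> float:
        return c.similarity * 0.5 ** (c.age_days / half_life_days)
    return sorted(candidates, key=score, reverse=True)[:keep]

print(chunk_with_overlap("a b c d e f g h i j", size=4, overlap=1))

# A wide first pass: the old preference scores highest on similarity alone.
wide = [
    Candidate("Prefers window seats.", 0.80, 365),
    Candidate("Now prefers aisle seats.", 0.75, 3),
    Candidate("Flies out of Denver.", 0.60, 10),
    Candidate("Asked about hotel points.", 0.40, 1),
]
for c in rerank(wide):
    print(c.text)
```

The year-old window-seat preference wins on raw similarity but drops out after the decay, which is the behavior you want when preferences evolve.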

And most importantly, treat retrieval like a product surface. Because it is. If retrieval is noisy, your model will feel “forgetful” or “random,” even if the model is strong.
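
If you want a first number for how noisy retrieval is, one simple proxy is hit rate: for each test question, did the chunk you expected show up in the top results? The sketch below assumes you have labeled a handful of questions with the ID of the memory that should be retrieved. `hit_rate`, the memory IDs, and the canned retriever are all made up for illustration. It scores retrieval only, not the usefulness or correctness of the final answers.

```python
def hit_rate(test_set, retrieve_fn, k=3):
    """Fraction of questions whose expected chunk ID appears in the top k results."""
    if not test_set:
        return 0.0
    hits = sum(
        1 for question, expected_id in test_set
        if expected_id in retrieve_fn(question)[:k]
    )
    return hits / len(test_set)

# Stand-in retriever returning canned chunk IDs; swap in your real one.
canned = {
    "What seat do I like?": ["m2", "m7", "m1"],
    "Where do I fly from?": ["m9", "m4", "m3"],
}

def fake_retrieve(question):
    return canned.get(question, [])

test_set = [
    ("What seat do I like?", "m2"),  # expected memory is retrieved
    ("Where do I fly from?", "m5"),  # expected memory is missing
]

print(hit_rate(test_set, fake_retrieve))
```

Tracking this number while you change chunk size, overlap, or reranking tells you whether a tweak actually improved retrieval or just felt better on one example.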

The takeaway

RAG is powerful because it gives stateless models a practical way to act like they remember. It is also increasingly common across industry because it helps ground answers and reduce hallucinations.

But the real work is not just adding RAG. The real work is tuning retrieval so the model gets contextual relevance without drowning in noise.

If you are building with RAG, you are not only designing prompts. You are designing memory. And memory is a precision game.