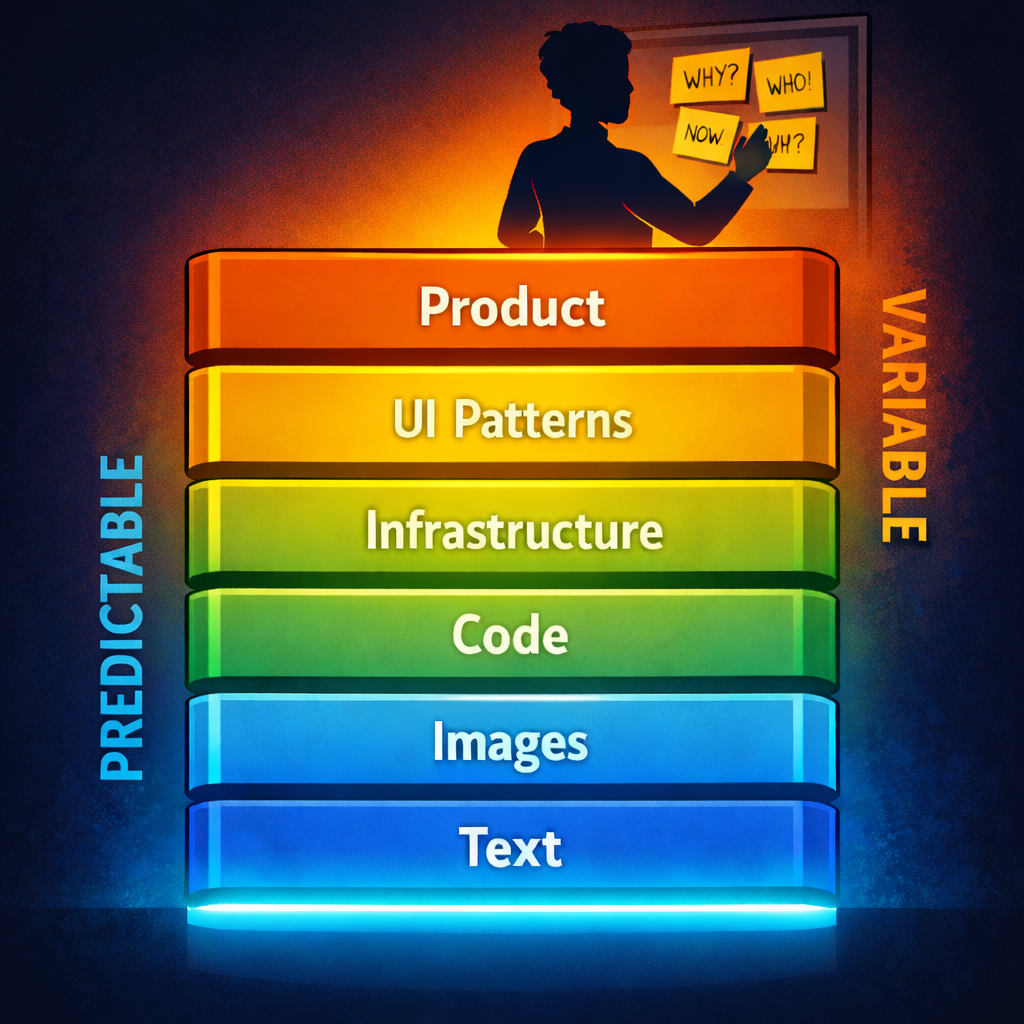

Why AI wins where things are predictable, and why product is still the hard part

I have been thinking a lot about why AI shows up where it does first.

At the core, LLMs are stochastic. They generate the next token by assigning probabilities to what could come next, then sampling from that distribution. That is a simple idea with big consequences, because it means these systems thrive where patterns are strong, repeatable, and heavily represented in the data.
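To make that concrete, here is a minimal sketch of next-token selection in Python. The vocabulary, the scores, and the function names are all invented for illustration and do not come from any real model. The point is only to show how a strong pattern makes the outcome close to certain, while a weak one leaves it wide open.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

def entropy(probs):
    """Shannon entropy in bits: lower means more predictable."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical toy vocabulary and scores (assumptions, not real model output).
vocab = ["return", "print", "banana", "raise"]
strong_pattern = [6.0, 1.0, 0.0, 1.0]  # one continuation dominates
weak_pattern = [1.0, 1.0, 1.0, 1.0]    # every continuation is equally likely

rng = random.Random(0)  # seeded so repeated runs behave the same way
print(sample_next_token(vocab, strong_pattern, rng=rng))
print(entropy(softmax(strong_pattern)), entropy(softmax(weak_pattern)))
```

The strongly patterned case has far lower entropy than the flat one, which is a rough way of saying the model has much less to guess. That is the sense in which predictable layers are easier for a probabilistic system.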

Once you see it that way, the adoption curve makes more sense.

AI moves fastest in the parts of work that are easiest to predict.

Text is full of repeatable structure. Images have patterns too, especially when you are talking about common compositions and styles. Code is highly structured and surprisingly repetitive. Infrastructure has conventions. UI patterns repeat across products. QA and scaffolding have checklists, templates, and known failure modes. Those layers are predictable, so models get good there fast. And the better the model gets, the more we start treating those layers like commodities.

That is what I mean when I say the stack is flattening.

Not because engineering goes away, but because more of the “how” becomes easier to generate, modify, and iterate on quickly. The effort shifts upward from typing to directing. From assembling pieces to shaping systems. You can see the software development lifecycle (SDLC) compressing in real time, with AI influencing much more than just code generation.

But product is different.

Product lives in human context.

What to build. Why it matters. Who it is for. What problem is actually worth solving. How it should feel. What tradeoff is acceptable right now. What risk is not acceptable ever.

That is where variability in the stack is highest.

Even when you have data, you still have judgment. Two teams can look at the same dashboard and make completely different calls. One might optimize for growth, another for retention, another for trust. That is not a bug. That is the work.

And it explains why the most impressive AI gains often show up first in areas that look like structured transformation. Summarize this. Draft that. Generate the code. Fix the tests. Refactor the function. Those are all real wins, but they live closer to the predictable layers.

Product work has fewer “correct” answers.

It has more “it depends.”

So where does that leave us?

I think it creates a durable advantage for product people who know how to work with AI, not just around AI.

If you can translate intent into clear prompts, guide agents, run small experiments, and learn from real users, you are in a strong position. Not because AI replaces product judgment, but because AI amplifies it. Your ability to pick the right direction becomes more valuable when execution gets cheaper and faster.

Here is the shift I keep seeing:

1) The bottleneck moves from building to deciding

When it takes weeks to ship something, it is easy to hide behind process. When it takes hours, you cannot. You are forced to get crisp on what matters.

2) The cost of being wrong drops

Fast iteration changes the emotional math. You can try more things. You can test smaller ideas. You can learn earlier. That favors people who are comfortable with experimentation and feedback loops.

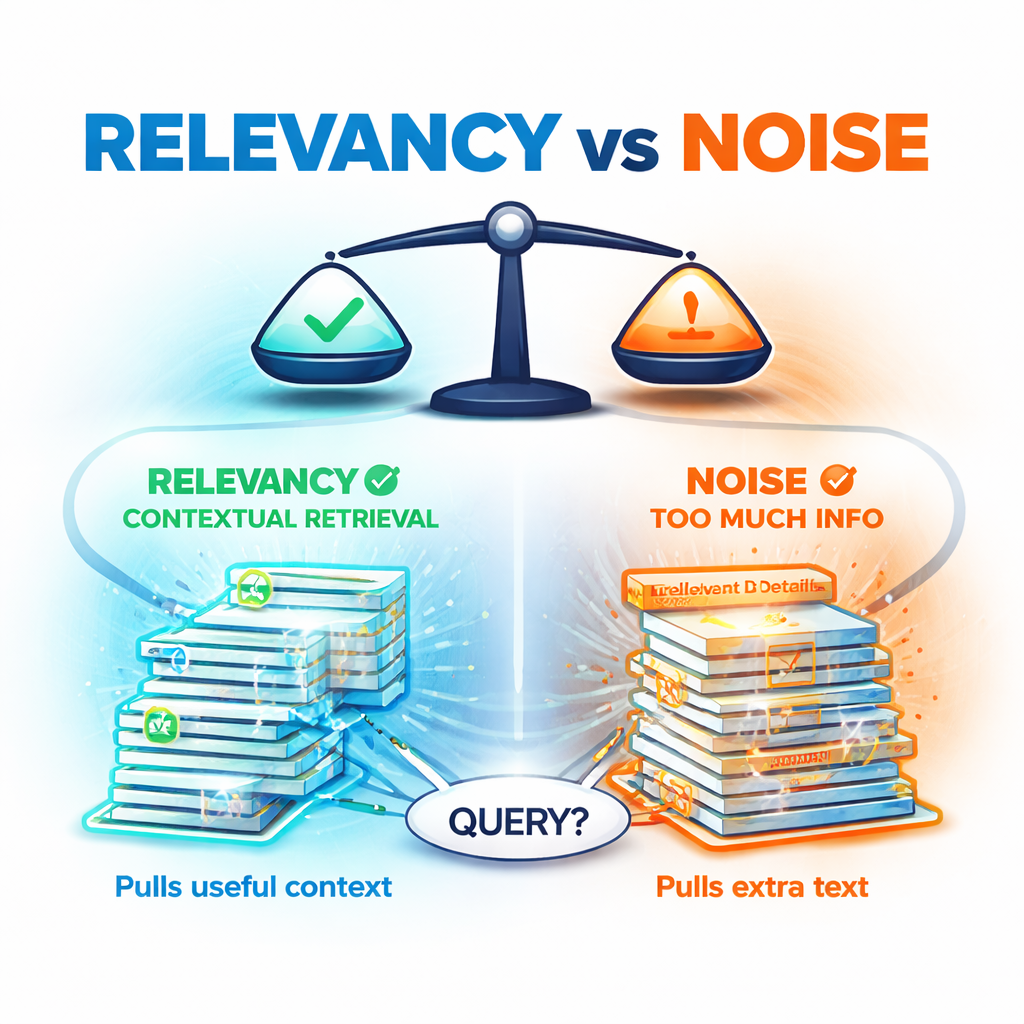

3) The cost of being unclear goes up

AI is a multiplier, but it multiplies what you give it. Clear intent produces leverage. Vague intent produces noise.

This is also why I think product managers end up closer to the center of agentic systems than people expect. Someone has to define the job, set the guardrails, decide what “good” looks like, and design the feedback loop that makes the system better over time. Even leaders at large tech companies are saying some version of this out loud, especially as agents become more capable and more autonomous.

Now, I want to be honest about one more thing.

Just because the lower layers are predictable does not mean they are “solved.” Predictable does not mean safe. We are already seeing that AI can produce code that looks clean but carries quality or security risk, and it can increase the review burden if teams do not adjust how they validate and ship. Speed is real, but so is the need for good judgment and good guardrails.

That brings me back to the main point.

AI climbs the stack by eating predictability.

Text, images, code, infrastructure, UI patterns, scaffolding, QA. Those domains are pattern rich. They are repeatable. They are easier for probabilistic systems to model.

Product is pattern rich too, but the patterns are messier because humans are messier. Context changes. Culture changes. The market moves. What users say they want is not always what they need. And what matters is not always what measures well.

That is why I keep coming back to this framing:

The stack is getting flatter, but meaning is still the top layer.

And meaning is still very human.